A cellular network or mobile network is a communications network where the last link is wireless. The network is distributed over land areas called cells, each served by at least one fixed-location transceiver, known as a cell site or base station. In a cellular network, each cell uses a different set of frequencies from neighboring cells, to avoid interference and provide guaranteed bandwidth within each cell.
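
The rule that neighboring cells use different frequency sets can be illustrated with a minimal sketch. It assumes an idealized hexagonal grid in axial coordinates `(q, r)` and three frequency groups assigned by the pattern `(q - r) mod 3`. The grid model, the group count, and the function names are illustrative assumptions, not something the text specifies.

```python
# Offsets of the six neighbors of a hexagonal cell in axial coordinates (q, r).
HEX_NEIGHBORS = [(1, 0), (-1, 0), (0, 1), (0, -1), (1, -1), (-1, 1)]


def frequency_group(q: int, r: int) -> int:
    """Return the frequency group (0, 1, or 2) of the cell at axial (q, r).

    Assumption: three groups assigned by (q - r) mod 3. Each neighbor offset
    changes q - r by 1 or 2 in absolute value, so a neighbor never lands in
    the same group.
    """
    return (q - r) % 3


def neighbors_differ(radius: int) -> bool:
    """Check that no cell in a square patch of the grid shares a group with a neighbor."""
    for q in range(-radius, radius + 1):
        for r in range(-radius, radius + 1):
            for dq, dr in HEX_NEIGHBORS:
                if frequency_group(q, r) == frequency_group(q + dq, r + dr):
                    return False
    return True
```

Calling `neighbors_differ(5)` checks the property over a small patch of the grid. Real deployments may use larger reuse patterns and irregular cell shapes.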

When joined together, these cells provide radio coverage over a wide geographic area. This enables a large number of portable transceivers (e.g., mobile phones and pagers) to communicate with each other and with fixed transceivers and telephones anywhere in the network, via base stations, even if some of the transceivers are moving through more than one cell during transmission.
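
A transceiver moving through more than one cell has to be passed from one base station to the next. The sketch below illustrates the idea under a strong simplifying assumption: the serving cell is always the geometrically nearest base station. Real networks typically decide on measured signal quality instead. The function names and coordinates are hypothetical.

```python
import math


def serving_cell(position, base_stations):
    """Return the id of the base station closest to `position`.

    `base_stations` maps a cell id to an (x, y) location.
    """
    return min(base_stations, key=lambda cell_id: math.dist(position, base_stations[cell_id]))


def handovers(path, base_stations):
    """Return (step_index, old_cell, new_cell) for each change of serving cell along `path`."""
    events = []
    current = serving_cell(path[0], base_stations)
    for step, position in enumerate(path[1:], start=1):
        best = serving_cell(position, base_stations)
        if best != current:
            events.append((step, current, best))
            current = best
    return events


# Example: a device moving in a straight line between two base stations.
stations = {"A": (0.0, 0.0), "B": (10.0, 0.0)}
route = [(0.0, 0.0), (4.0, 0.0), (6.0, 0.0), (10.0, 0.0)]
events = handovers(route, stations)
```

In this example the device is handed from cell `A` to cell `B` once it passes the midpoint between the two stations.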

Cellular networks offer a number of desirable features:

- More capacity than a single large transmitter, since the same frequency can be used for multiple links as long as they are in different cells
- Mobile devices use less power than with a single transmitter or satellite, since the cell towers are closer
- Larger coverage area than a single terrestrial transmitter, since additional cell towers can be added indefinitely and are not limited by the horizon
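
The capacity gain from reusing frequencies can be made concrete with a small calculation. This is a simplified sketch: it assumes the available channels are split evenly across a repeating cluster of cells and that every cell can use all of its channels at once. The function name and the numbers are illustrative only.

```python
def cellular_capacity(total_channels: int, cluster_size: int, num_cells: int) -> int:
    """Return the number of simultaneous links under an even-split reuse model.

    Assumption: `total_channels` are divided evenly among the `cluster_size`
    cells of one reuse cluster, and the pattern repeats over `num_cells` cells.
    """
    channels_per_cell = total_channels // cluster_size
    return channels_per_cell * num_cells


# A single large transmitter with 420 channels supports at most 420 links.
single_transmitter_links = 420
# The same 420 channels reused over 70 cells in clusters of 7.
cellular_links = cellular_capacity(total_channels=420, cluster_size=7, num_cells=70)
```

Under these assumptions each cell gets 60 channels, so 70 cells carry 4200 simultaneous links, ten times what the single transmitter supports.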

Major telecommunications providers have deployed voice and data cellular networks over most of the inhabited land area of the Earth. This allows mobile phones and mobile computing devices to be connected to the public switched telephone network and public Internet.

Private cellular networks can be used for research or for large organizations and fleets, such as dispatch for local public safety agencies or a taxicab company.

https://en.wikipedia.org/wiki/Cellular_network

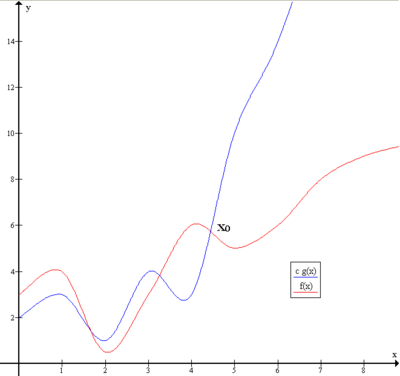

[…], there exists an input of M requiring more space than k. […] be the set of all configurations of M on input x. Because M ∈ DSPACE(s(n)), then […] = o(log n), where c is a constant depending on M. […]: if it is longer than that, then some configuration will repeat, and M will go into an infinite loop. There are also at most […] possibilities for every element of a crossing sequence, so the number of different crossing sequences of M on x is